In my previous post, I shared a frustrating encounter I had at the Starbucks drive-thru. The highlight? A barista who seemed personally offended that I wanted to use Apple Pay and then all but demanded a tip. It rubbed me the wrong way — and like I said, I usually tip! But attitude earns zero.

After writing it up, I thought a related image would really bring the post to life. Before AI image generation, finding a fitting photo was like a scavenger hunt across the internet. And even if I found something close, there was always the copyright cloud hanging overhead. Enter AI art tools — suddenly I could ask for exactly what I needed and have it generated in seconds. It’s not always perfect (hello, baristas with four arms or nightmare-fingers), but when it works, it really works.

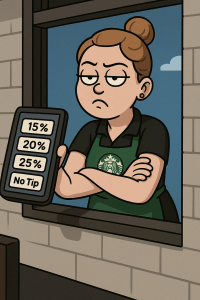

So I asked my AI tool to create a cartoon-style image of a barista at a drive-thru window, shoving a tip screen at the customer with attitude. It delivered. But… something was off.

The character it gave me was a Black woman with a scowl.

Now, let me be clear — the race of the barista in my story wasn’t part of the issue. In fact, she was a white Eastern European woman. So when I saw the image, I couldn’t help but wonder: Was that just a random output… or something deeper?

Was this a glitch, or was I looking at something more unsettling — an AI model that had absorbed and regurgitated stereotypes like the “angry Black woman”? Had it unintentionally reflected a societal bias it had learned from the vast sea of human content it was trained on?

That realization bothered me. It wasn’t just about accuracy — it was about fairness. So I asked for the image to be redone with a white woman, thinking it would be a simple switch. But then the new images came back… off. Multiple arms. Distorted faces. Somehow, when asked to portray a white woman with the same level of exaggerated attitude, the system seemed to short-circuit.

Eventually, after a few rounds, I got something close to what I had imagined.

Now, I’m not saying the AI is consciously biased (it doesn’t have a consciousness). But it is trained on human-created content — and that content carries all our messy, biased baggage. What we put in is what we get out.

So, was it a coincidence? Maybe. But maybe not.

As AI becomes more woven into our creative and social tools, we have to keep an eye on the assumptions it inherits. It’s powerful — and yes, incredibly helpful — but it’s not neutral. It learns from us, and sometimes it learns the worst of us.

Something to think about the next time we press “generate.”

Discover more from Tate Basildon